Activities

Activity 1: Application of Deep Neural Networks (DNN) to improve iterative decoding algorithms for LDPC codes

Subactivity 1.1: Analysis of Multi-Layer Perceptron Neural Network (MLPNN) and Recursive Neural Network (RNN) in the context of iterative decoding of LDPC codes

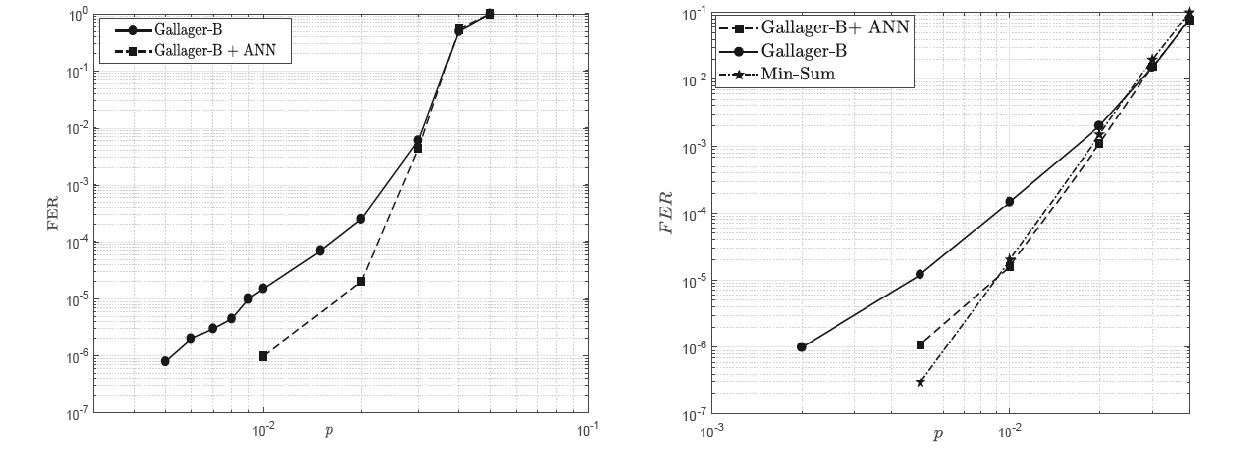

We analyzed the application of Deep Neural Networks (DNN) to improving iterative decoding algorithms for LDPC codes. In particular, we examined the possibility of using single-hidden-layer feed-forward neural networks (SLFNs) and two-hidden-layer feed-forward neural networks (TLFNs) to decode linear block codes. The analysis was published in a conference paper presented at the 29th Telecommunications Forum (TELFOR 2021).
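The following minimal pure-Python sketch makes the SLFN setting concrete. It is not the model from the TELFOR 2021 paper. A single-hidden-layer network is trained by full-batch gradient descent to map noisy BPSK observations of a codeword to its message bits. Several choices are illustrative assumptions: the (7,4) Hamming code, the 16 tanh hidden units, the noise level (sigma = 0.5), and the learning rate. A TLFN would add a second hidden layer between `W1` and `W2`.

```python
import math
import random

# Systematic generator matrix G = [I | P] of the (7,4) Hamming code
# (assumption: a small stand-in for the linear block codes analyzed).
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def encode(msg):
    """Codeword = msg * G over GF(2)."""
    return [sum(m * g for m, g in zip(msg, col)) % 2 for col in zip(*G)]


def bpsk(bits):
    """Map bit 0 -> +1.0 and bit 1 -> -1.0."""
    return [1.0 - 2.0 * b for b in bits]


class SLFN:
    """n_in inputs -> n_hidden tanh units -> n_out sigmoid outputs (one per message bit)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [math.tanh(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(self.W1, self.b1)]
        z = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(self.W2, self.b2)]
        return h, [1.0 / (1.0 + math.exp(-zk)) for zk in z]

    def train_step(self, data, lr):
        """One full-batch gradient step on binary cross-entropy; returns the loss before the step."""
        gW1 = [[0.0] * len(r) for r in self.W1]
        gb1 = [0.0] * len(self.b1)
        gW2 = [[0.0] * len(r) for r in self.W2]
        gb2 = [0.0] * len(self.b2)
        loss = 0.0
        for x, t in data:
            h, y = self.forward(x)
            loss -= sum(tk * math.log(max(yk, 1e-12)) + (1 - tk) * math.log(max(1 - yk, 1e-12))
                        for yk, tk in zip(y, t))
            d2 = [yk - tk for yk, tk in zip(y, t)]  # dLoss/dz for sigmoid + cross-entropy
            for k, dk in enumerate(d2):
                gb2[k] += dk
                for j, hj in enumerate(h):
                    gW2[k][j] += dk * hj
            for j, hj in enumerate(h):
                # Backpropagate through tanh: derivative is 1 - tanh^2.
                d1 = (1.0 - hj * hj) * sum(d2[k] * self.W2[k][j] for k in range(len(d2)))
                gb1[j] += d1
                for i, xi in enumerate(x):
                    gW1[j][i] += d1 * xi
        m = len(data)
        for W, gW in ((self.W1, gW1), (self.W2, gW2)):
            for row, grow in zip(W, gW):
                for i, g in enumerate(grow):
                    row[i] -= lr * g / m
        for b, gb in ((self.b1, gb1), (self.b2, gb2)):
            for i, g in enumerate(gb):
                b[i] -= lr * g / m
        return loss / m

    def decode(self, x):
        """Hard decision on each output: estimated message bits."""
        return [int(p > 0.5) for p in self.forward(x)[1]]


# Training set: every 4-bit message, sent over BPSK + AWGN (sigma = 0.5 is an assumed value).
rng = random.Random(1)
messages = [[(m >> s) & 1 for s in (3, 2, 1, 0)] for m in range(16)]
data = [([v + rng.gauss(0.0, 0.5) for v in bpsk(encode(msg))], msg) for msg in messages for _ in range(4)]
net = SLFN(7, 16, 4)
losses = [net.train_step(data, lr=0.5) for _ in range(150)]
print("loss:", round(losses[0], 3), "->", round(losses[-1], 3))
```

Because this toy code is systematic, the first four symbols already carry the message. The network mainly has to learn how the parity symbols help against noise. For the LDPC codes targeted here, the output layer would be much wider, and the training cost of such networks is the main obstacle.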

Subactivity 1.2: Performance analysis of belief propagation decoder optimized by using Deep Neural Networks

We have shown that, with current knowledge of TLFNs and SLFNs, maximum likelihood decoding performance cannot be guaranteed at a complexity significantly lower than that of trellis-based decoding. However, TLFNs can serve as an efficient post-processing block when decoding LDPC codes, lowering error floors with a small complexity penalty. Future work will involve a more thorough examination of employing artificial neural networks (ANNs) to decode practically significant LDPC codes, and of involving deep neural networks in the decoding process. The analysis is presented in a paper published in the Telfor Journal.
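The maximum likelihood benchmark can be illustrated by brute force. The sketch below assumes BPSK over an AWGN channel and uses a small (7,4) Hamming code as a hypothetical example. It compares the received vector against all 2^k codewords. That search grows exponentially with k, which is why exact ML decoding of practical LDPC codes is out of reach and lower-complexity alternatives are of interest.

```python
import itertools

# Systematic generator matrix of the (7,4) Hamming code (illustrative example code).
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def encode(msg):
    """Codeword = msg * G over GF(2)."""
    return [sum(m * g for m, g in zip(msg, col)) % 2 for col in zip(*G)]


def ml_decode(y):
    """Exhaustive ML decoding for BPSK (0 -> +1, 1 -> -1) over AWGN.

    All BPSK codewords have the same energy, so minimizing the Euclidean
    distance to y is equivalent to maximizing the correlation
    sum_i y_i * (1 - 2 c_i). Returns the best message and the number of
    codewords examined (2**k).
    """
    best_msg, best_metric, examined = None, float("-inf"), 0
    for msg in itertools.product((0, 1), repeat=len(G)):
        c = encode(msg)
        metric = sum(yi * (1 - 2 * ci) for yi, ci in zip(y, c))
        examined += 1
        if metric > best_metric:
            best_msg, best_metric = list(msg), metric
    return best_msg, examined


# Codeword for message 1011 is 1011010; symbol 1 arrives with the wrong sign.
y = [-1.0, -0.8, -1.0, -1.0, 1.0, -1.0, 1.0]
print(ml_decode(y))  # -> ([1, 0, 1, 1], 16)
```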

Performance of ANN-based decoder

Activity 2: Optimization of gradient descent bit-flipping (GDBF) decoder

Subactivity 2.1: Identification of machine learning (ML) techniques suitable for application in bit-flipping based decoders

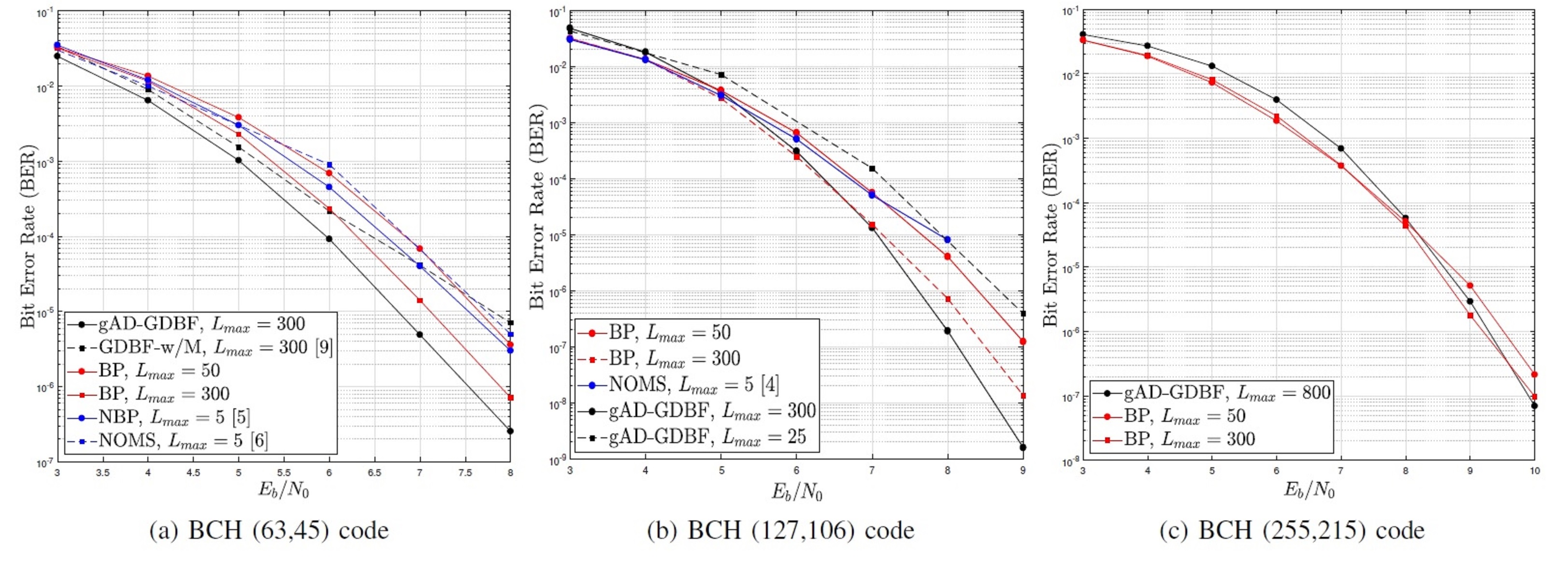

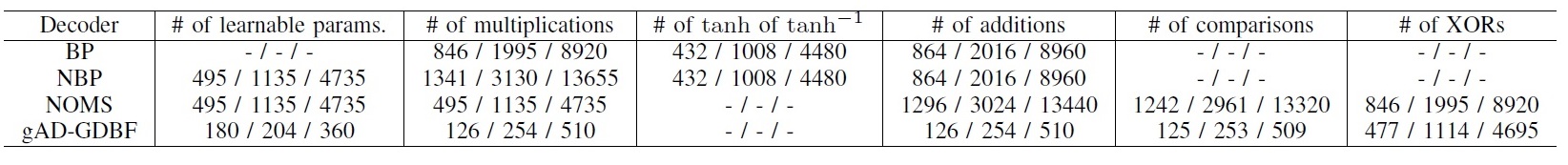

It has been shown that the adaptation method should be based on a genetic optimization algorithm incorporated into the recently proposed Gradient Descent Bit-Flipping Decoding with Momentum (GDBF-w/M). We proposed a generalized adaptive diversity gradient-descent bit-flipping (gAD-GDBF) decoder. In this decoder, a genetic algorithm optimizes a set of learnable parameters, which can dramatically improve the performance of short Bose-Chaudhuri-Hocquenghem (BCH) codes. For the same number of decoding iterations, this decoder outperforms the belief-propagation decoder in terms of bit error rate while significantly reducing decoding complexity. This result was presented in a conference paper at the International Symposium on Topics in Coding (ISTC 2023).
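The following minimal sketch shows how a genetic algorithm can tune a bit-flipping decoder. It is not the gAD-GDBF algorithm. Instead, it makes these simplifying assumptions:

- It uses the basic single-flip GDBF inversion function.
- It treats one weight per parity check (`w`) as the learnable parameters.
- It decodes the (7,4) Hamming code, which is the length-7 binary BCH code, with the all-zero codeword sent over BPSK/AWGN.

Fitness is the number of correctly decoded training frames. The population size, mutation strength, and noise level are also assumed values.

```python
import math
import random

# Parity-check matrix H = [P^T | I] of the (7,4) Hamming code, i.e. the
# single-error-correcting binary BCH code of length 7 (illustrative stand-in).
H = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]
CHECKS = [[i for i, h in enumerate(row) if h] for row in H]  # VNs in each check
VAR_CHECKS = [[j for j, row in enumerate(H) if row[i]] for i in range(len(H[0]))]  # checks of each VN


def gdbf_decode(y, w, max_iter=10):
    """Single-flip GDBF with per-check weights w (hypothetical learnable parameters).

    Inversion function: delta_i = x_i * y_i + sum_{j in M(i)} w_j * s_j, where
    s_j = +1 if check j is satisfied and -1 otherwise; the bit with the
    smallest delta_i is flipped in each iteration.
    """
    x = [1 if yi >= 0 else -1 for yi in y]
    for _ in range(max_iter):
        s = [math.prod(x[i] for i in c) for c in CHECKS]
        if all(sj == 1 for sj in s):
            break
        delta = [x[i] * y[i] + sum(w[j] * s[j] for j in VAR_CHECKS[i]) for i in range(len(x))]
        k = min(range(len(x)), key=delta.__getitem__)
        x[k] = -x[k]
    return [(1 - xi) // 2 for xi in x]


def fitness(w, frames):
    """Number of training frames (all-zero codeword sent) decoded correctly."""
    return sum(gdbf_decode(y, w) == [0] * len(y) for y in frames)


def make_frames(n, sigma, seed):
    """All-zero codeword -> BPSK all +1 -> AWGN with standard deviation sigma."""
    rng = random.Random(seed)
    return [[1.0 + rng.gauss(0.0, sigma) for _ in range(len(H[0]))] for _ in range(n)]


def evolve(frames, pop_size=8, generations=8, seed=0):
    """Elitist GA: keep the better half, refill by uniform crossover + Gaussian mutation."""
    rng = random.Random(seed)
    m = len(H)
    # Plain-GDBF weights (all ones) are seeded into the population as a baseline.
    pop = [[1.0] * m] + [[rng.uniform(0.2, 2.0) for _ in range(m)] for _ in range(pop_size - 1)]
    for _ in range(generations):
        elite = sorted(pop, key=lambda w: fitness(w, frames), reverse=True)[: pop_size // 2]
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]  # uniform crossover
            children.append([max(0.0, v + rng.gauss(0.0, 0.1)) for v in child])  # mutation
        pop = elite + children
    best = max(pop, key=lambda w: fitness(w, frames))
    return best, fitness(best, frames)


frames = make_frames(40, sigma=0.7, seed=1)
best_w, best_fit = evolve(frames)
print("plain GDBF:", fitness([1.0] * len(H), frames), "| GA-tuned:", best_fit)
```

The plain-GDBF weights are seeded into the initial population, and the better half always survives. So the tuned weights can never score below plain GDBF on the training frames. A real design would also evaluate them on separate test frames.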

Performance of gAD-GDBF decoder

Complexity of gAD-GDBF decoder

Subactivity 2.2: Performance analysis of probabilistic GDBF decoder optimized by using appropriate ML technique

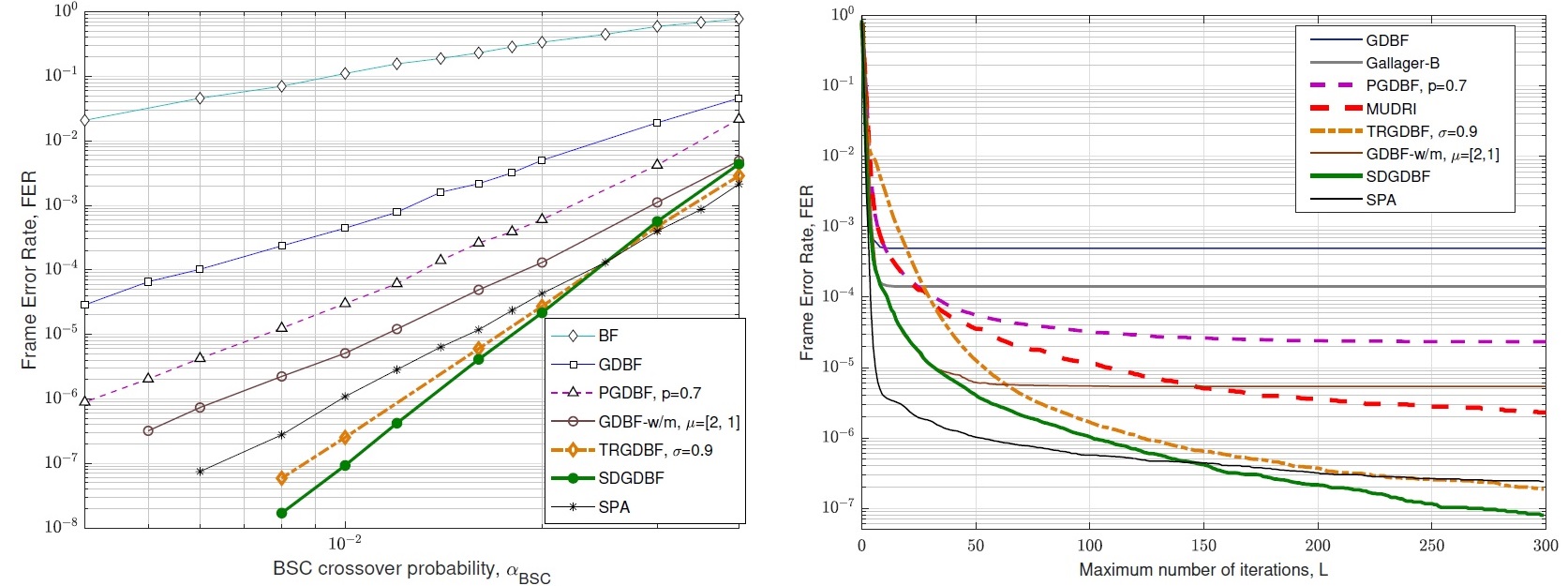

By combining the concept of momentum with the graph properties of typical trapping sets, we have proposed a deterministic modification of the GDBF algorithm. In previously proposed GDBF-based algorithms, flipping decisions are based on an energy function that takes into account the number of unsatisfied parity checks connected to the corresponding variable node (VN). In this work, we analyze typical error patterns in which a VN is erroneous but its connected parity checks are satisfied (and vice versa). The proposed modification of the flipping rule takes these parity checks into account. They are no longer assumed to be valid indicators of whether the corresponding VN is correct. We have shown that the largest performance improvement is obtained when the proposed modification is applied successively to the received word. We have also proposed a more general framework based on deterministic re-initializations of the decoder input in multiple decoding attempts. The main results were published in the journal Entropy, which is indexed in the JCR list.
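This paragraph combines two ingredients: a momentum term in the GDBF inversion function, and repeated decoding attempts with deterministically re-initialized input. The sketch below illustrates both, but neither reproduces the trapping-set-aware flipping rule of the published SDGDBF decoder. The momentum form is a simplified assumption: a bonus `rho[l]` is added to recently flipped bits. The re-initialization rule is a hypothetical Chase-style one that inverts the sign of the least reliable received values in turn. The (7,4) Hamming code again serves only as a small example.

```python
import math
import random

# (7,4) Hamming parity-check matrix, used only as a small example code.
H = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]
CHECKS = [[i for i, h in enumerate(row) if h] for row in H]
VAR_CHECKS = [[j for j, row in enumerate(H) if row[i]] for i in range(len(H[0]))]


def syndrome(x):
    """s_j = +1 for a satisfied check, -1 for an unsatisfied one (bipolar x)."""
    return [math.prod(x[i] for i in c) for c in CHECKS]


def gdbf_momentum(y, rho, max_iter=20):
    """Single-flip GDBF with a momentum term (simplified, assumed form).

    A bit flipped l+1 iterations ago gets rho[l] added to its inversion
    function, which discourages flipping it straight back.
    Returns (hard-decision bits, success flag).
    """
    n = len(y)
    x = [1 if yi >= 0 else -1 for yi in y]
    age = [len(rho)] * n  # iterations since last flip; >= len(rho) means no momentum
    for _ in range(max_iter):
        s = syndrome(x)
        if all(sj == 1 for sj in s):
            break
        delta = [
            x[i] * y[i]
            + sum(s[j] for j in VAR_CHECKS[i])
            + (rho[age[i]] if age[i] < len(rho) else 0.0)
            for i in range(n)
        ]
        k = min(range(n), key=delta.__getitem__)
        x[k] = -x[k]
        age = [a + 1 for a in age]
        age[k] = 0
    return [(1 - xi) // 2 for xi in x], all(sj == 1 for sj in syndrome(x))


def decode_with_reinit(y, rho, max_attempts=4):
    """Multiple decoding attempts with deterministic re-initialization of the input.

    Hypothetical rule (Chase-style, not the SDGDBF rule): in attempt t >= 2,
    invert the sign of the (t-1)-th least reliable received value.
    Returns (bits, success flag, attempts used).
    """
    bits, ok = gdbf_momentum(y, rho)
    if ok:
        return bits, True, 1
    order = sorted(range(len(y)), key=lambda i: abs(y[i]))
    for attempt, i in enumerate(order[: max_attempts - 1], start=2):
        y_t = list(y)
        y_t[i] = -y_t[i]
        bits, ok = gdbf_momentum(y_t, rho)
        if ok:
            return bits, True, attempt
    return bits, False, max_attempts


# Usage: all-zero codeword over BPSK/AWGN (sigma = 0.8 is an assumed value).
rng = random.Random(3)
y = [1.0 + rng.gauss(0.0, 0.8) for _ in range(len(H[0]))]
print(decode_with_reinit(y, rho=[1.0, 0.5]))
```

A success flag only certifies that the output is a valid codeword, not that it is the transmitted one. That distinction matters when such a framework is evaluated by bit or frame error rate.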

Performance of SDGDBF decoder